FrontierMath: Measuring Mathematical Problem Solving

The ability to reason through complex mathematical problems has become a key yardstick for evaluating advanced AI systems. Among the benchmarks built for this purpose, FrontierMath stands out for targeting the limits of what AI can do in mathematics. This post covers why FrontierMath matters, how it is structured, and what it implies for understanding AI progress, particularly in light of the results reported for OpenAI's new model, o3.

The Importance of FrontierMath

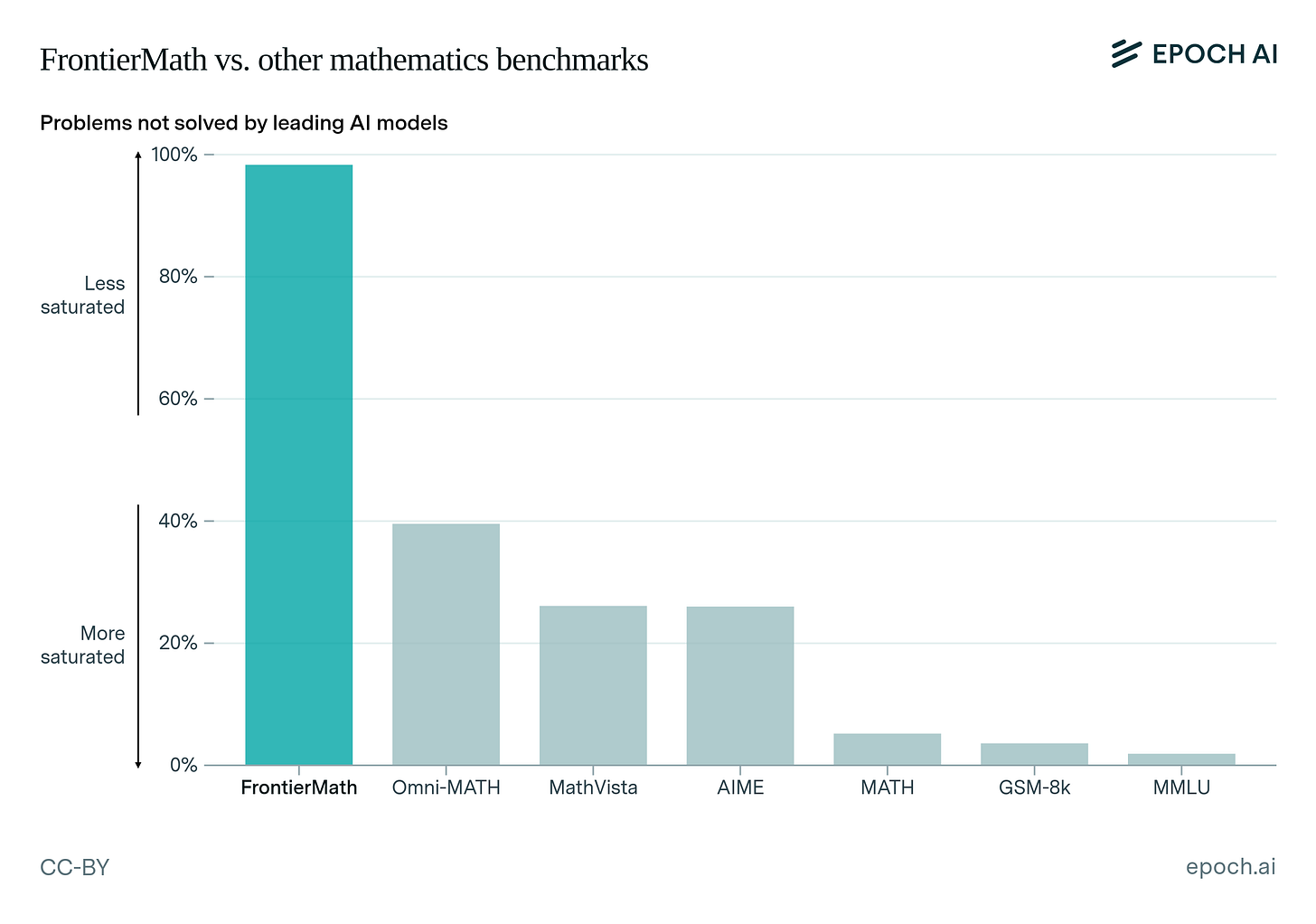

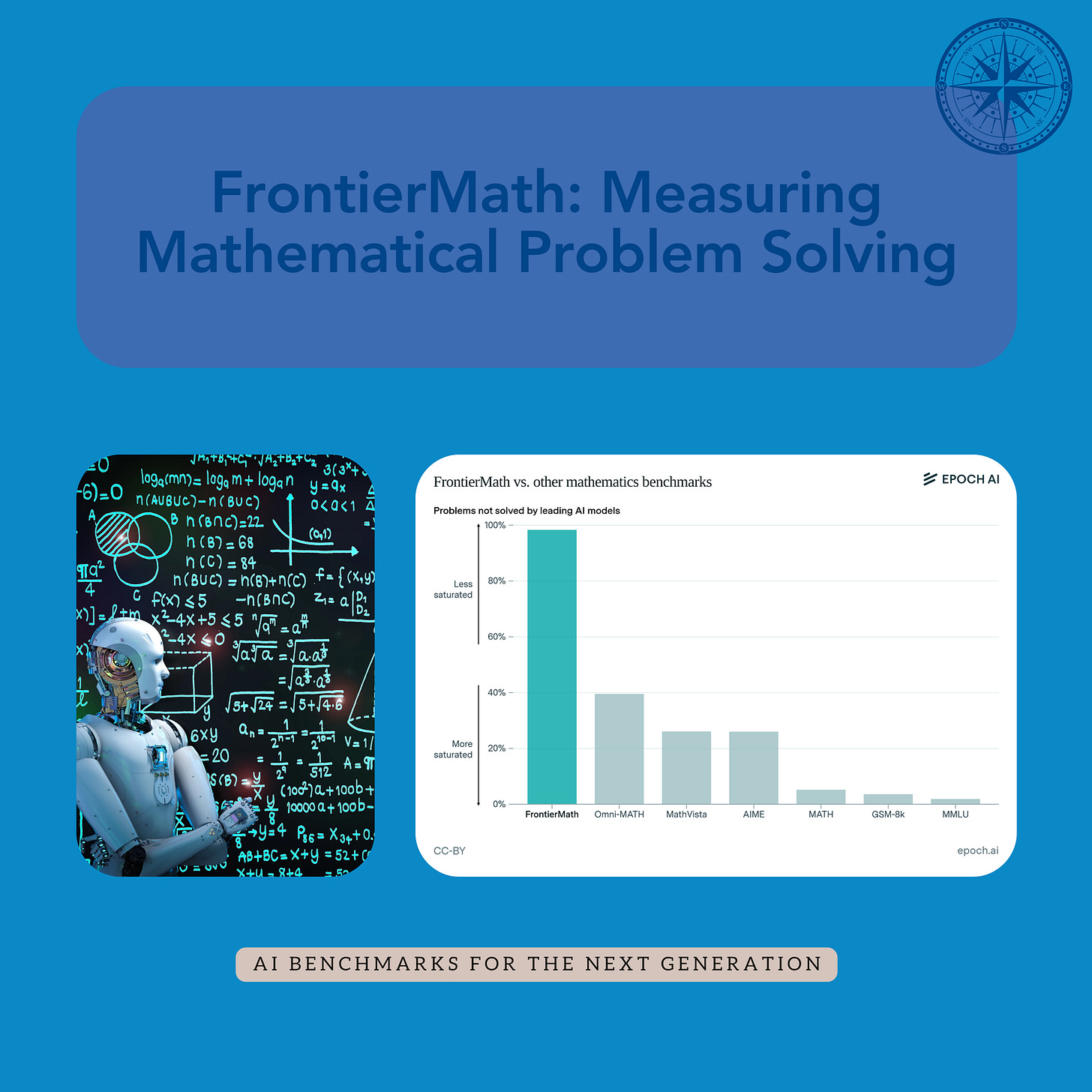

FrontierMath was introduced by Epoch AI in a paper first published in November 2024 to address a significant gap in existing AI benchmarks. Traditional benchmarks such as MATH and GSM8K have become saturated: many AI models now achieve near-perfect scores on them, and their comparatively simple problems no longer challenge advanced reasoning capabilities. In contrast, FrontierMath comprises hundreds of original, unpublished problems crafted by a team of over 60 mathematicians from prestigious institutions like MIT and UC Berkeley. These problems are specifically designed to push AI models to their limits, requiring deep mathematical knowledge and innovative problem-solving approaches.

Part of the benchmark's difficulty comes from its breadth: it covers approximately 70% of modern mathematical fields, including number theory, algebraic geometry, and combinatorics. Each problem demands not just computational skill but also a deep understanding of mathematical concepts and the ability to apply them creatively. This makes FrontierMath a robust tool for evaluating whether AI systems can engage in genuine mathematical reasoning akin to that of human experts.

Structure and Design of FrontierMath

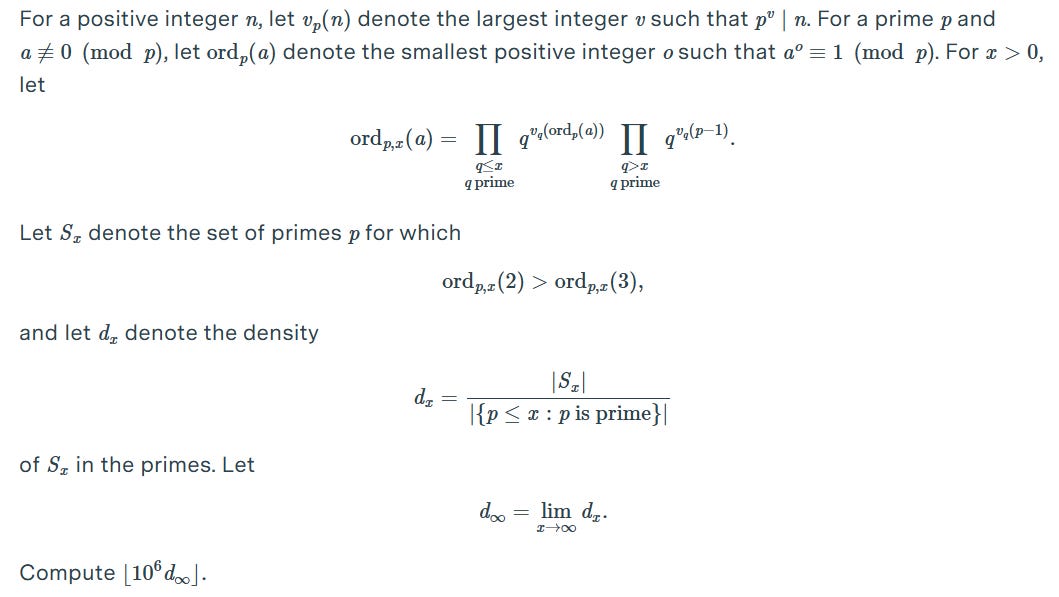

FrontierMath takes a distinctive approach to problem formulation. Each problem is designed to be hard enough that even expert mathematicians may need hours or days to solve it. The benchmark emphasizes "guess-proof" questions that resist the simple pattern recognition and brute-force strategies AI systems commonly rely on. For example, one problem might involve constructing high-degree polynomials with specific properties, framed within a geometric scenario. Such tasks call for not only strong computational abilities but also a creative application of principles from algebraic geometry.

The design criteria for FrontierMath problems focus on several key aspects:

Originality: Problems are unpublished and crafted specifically for this benchmark.

Difficulty: They are intended to be significantly more challenging than those found in traditional benchmarks.

Verifiability: Solutions can be rigorously evaluated using automated verification systems, ensuring objective assessments without human bias.

This rigorous design process lets FrontierMath measure the progress of AI models as they strive toward expert-level mathematical reasoning, and makes it a credible and legible marker of that progress.
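To make the verifiability criterion concrete, here is a minimal sketch of what an automated exact-match check could look like. It assumes that final answers are exact values such as integers or rationals; the function name `verify_answer` and the rule of rejecting floats are illustrative assumptions, not Epoch AI's actual verification code.

```python
from fractions import Fraction


def verify_answer(submitted, expected) -> bool:
    """Hypothetical exact-match checker: no tolerance, no partial credit."""
    # Assumption for this sketch: floating-point submissions are rejected,
    # because an approximate value cannot certify an exact answer.
    if isinstance(submitted, float):
        return False
    try:
        # Fraction accepts ints, Fractions, and strings such as "3/4",
        # and compares values exactly.
        return Fraction(submitted) == Fraction(expected)
    except (TypeError, ValueError, ZeroDivisionError):
        # Anything that cannot be parsed as an exact rational is wrong.
        return False


# A large integer answer must match digit for digit.
print(verify_answer(2**61 - 1, 2305843009213693951))
# A float close to the right value still fails the exact check.
print(verify_answer(0.75, Fraction(3, 4)))
```

Because the check is a plain equality test on exact objects, it needs no human grader, and an answer that is merely close gets no credit.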

Examples from the Benchmark

To better understand the nature of challenges posed by Frontier Math, consider some representative examples:

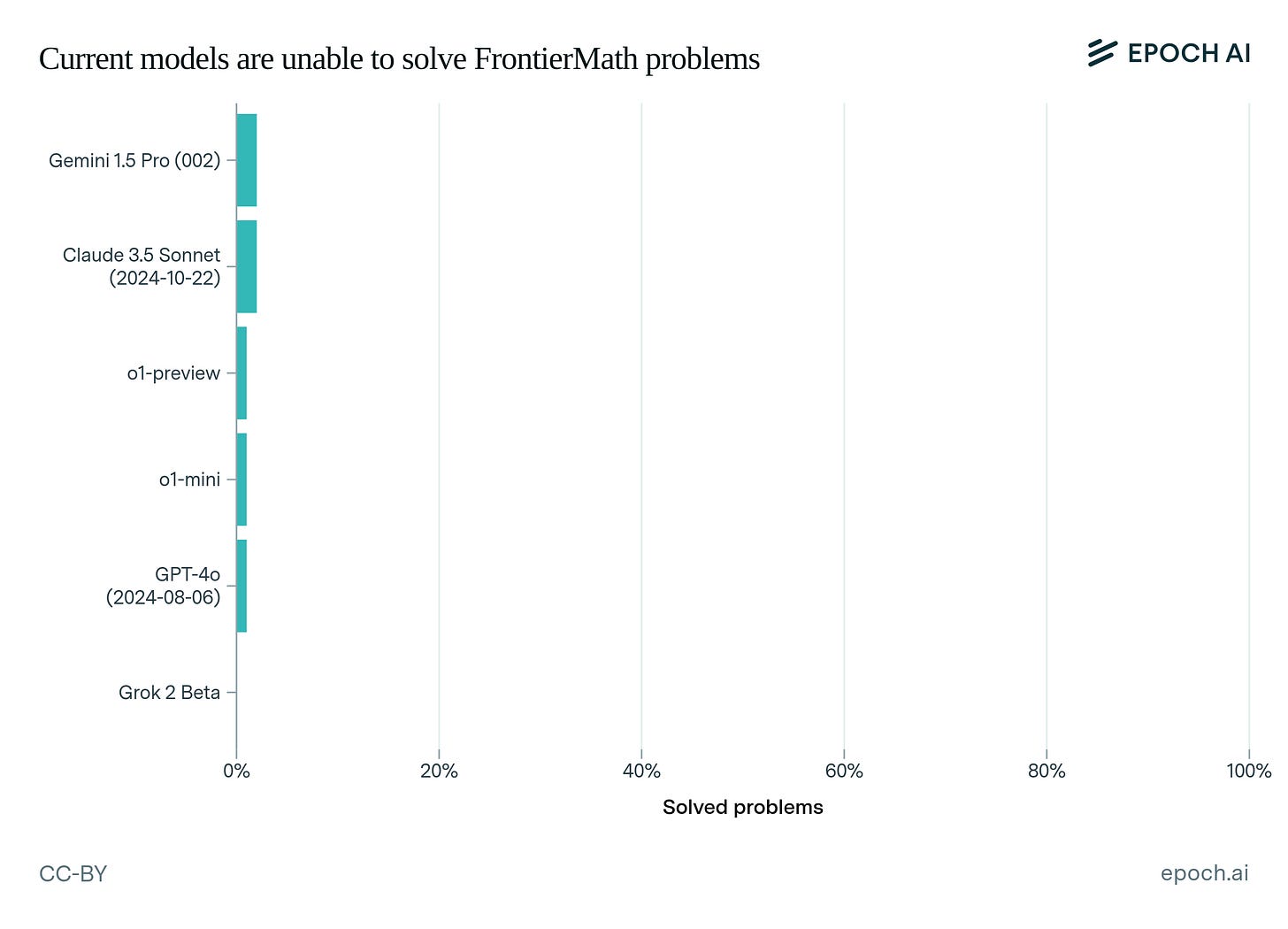

Artin's Conjecture on Primitive Roots: This high-difficulty problem requires an understanding of both number theory and algebraic structures. Solving it calls for innovative approaches that go beyond standard techniques.

High-Degree Polynomial Construction: This medium-to-high-difficulty task involves constructing and verifying high-degree polynomials with specific properties, which requires both computational efficiency and deep theoretical insight.

These examples illustrate how FrontierMath goes beyond traditional benchmarks by demanding multi-step logical reasoning akin to research-level mathematics. This is reflected in the reactions of at least two Fields medalists, who described the hardest questions in the benchmark as "extremely challenging" and "well beyond what we can do now".
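For readers unfamiliar with the mathematics behind the first example, the following sketch explores Artin's conjecture numerically. It is not the benchmark problem and does not come from the FrontierMath paper; it only illustrates the underlying statement. For the base 2, the conjecture predicts that 2 is a primitive root modulo a fraction of primes approaching Artin's constant (about 0.37396). All function names here are hypothetical helpers written for this post.

```python
def primes_up_to(limit):
    """Sieve of Eratosthenes: all primes <= limit (limit >= 2)."""
    sieve = bytearray([1]) * (limit + 1)
    sieve[0:2] = b"\x00\x00"
    for i in range(2, int(limit ** 0.5) + 1):
        if sieve[i]:
            # Clear every multiple of i starting at i*i.
            sieve[i * i :: i] = bytearray(len(range(i * i, limit + 1, i)))
    return [i for i, flag in enumerate(sieve) if flag]


def prime_factors(n):
    """Set of distinct prime factors of n, by trial division."""
    factors = set()
    d = 2
    while d * d <= n:
        while n % d == 0:
            factors.add(d)
            n //= d
        d += 1
    if n > 1:
        factors.add(n)
    return factors


def is_primitive_root(a, p):
    """True if a generates the multiplicative group modulo the prime p."""
    if a % p == 0:
        return False
    # a is a primitive root iff a^((p-1)/q) != 1 (mod p)
    # for every prime q dividing p - 1.
    return all(pow(a, (p - 1) // q, p) != 1 for q in prime_factors(p - 1))


def primitive_root_density(a, limit):
    """Fraction of odd primes p <= limit (not dividing a) with a as primitive root."""
    primes = [p for p in primes_up_to(limit) if p > 2 and a % p != 0]
    hits = sum(is_primitive_root(a, p) for p in primes)
    return hits / len(primes)


ARTIN_CONSTANT = 0.3739558136  # conjectured limiting density for a = 2

print(primitive_root_density(2, 10_000), "vs conjectured", ARTIN_CONSTANT)
```

A check like this can suggest that the conjecture is plausible, but it proves nothing. That gap between numerical evidence and an actual argument is what benchmark problems at this level are meant to probe.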

Performance of Current Models on FrontierMath

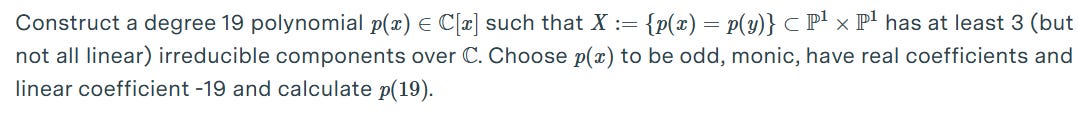

Despite the advanced capabilities of modern AI systems, FrontierMath has proven to be an exceptionally challenging benchmark. The evaluation process for this benchmark is designed to give AI models the best possible chance at success. Models are provided with ample time for reasoning and are given access to a Python environment where they can write and execute code, test hypotheses, verify intermediate results, and refine their approaches based on immediate feedback.

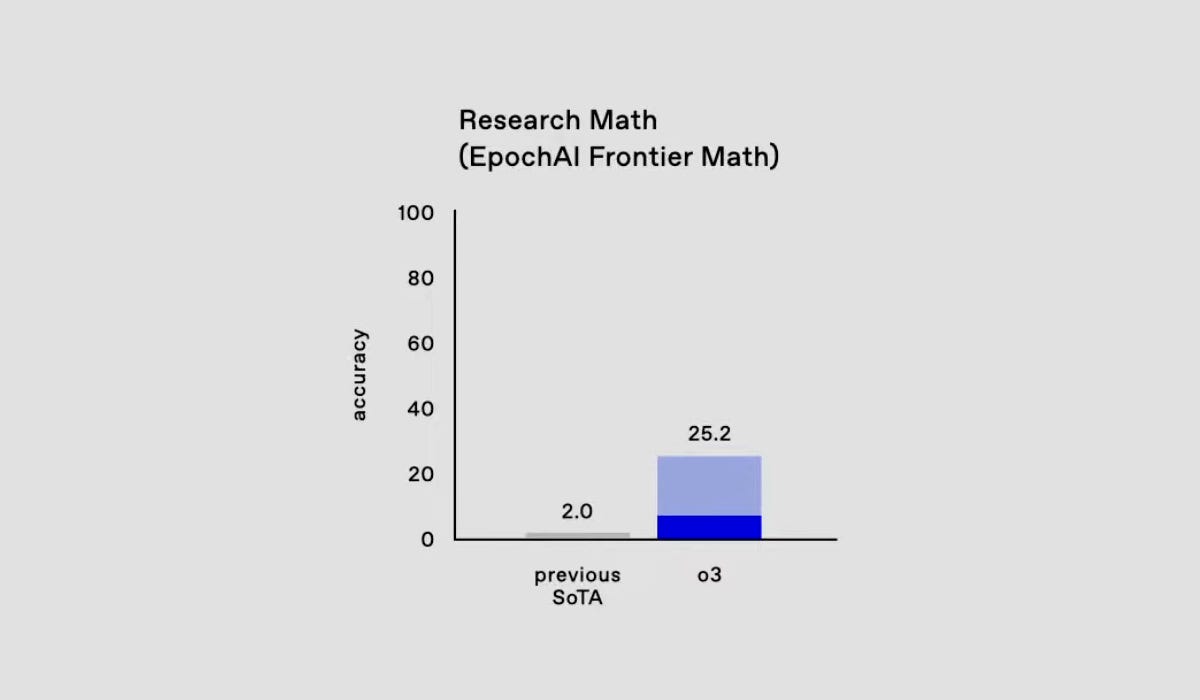

However, even with these supportive conditions, the performance of leading AI models on FrontierMath has been strikingly low. Epoch AI evaluated six of the most advanced language models available, including Claude 3.5 Sonnet, o1-preview, GPT-4o, and Gemini 1.5 Pro. None of them could solve more than 2% of the problems in the FrontierMath benchmark.

The low success rate on FrontierMath reveals a significant gap between the current capabilities of AI systems and the level of mathematical reasoning required for research-level mathematics. While AI has made impressive strides in many areas, including some aspects of mathematical problem-solving, FrontierMath demonstrates that there is still a considerable distance to cover before AI can match the depth of understanding and creative problem-solving abilities of expert human mathematicians.

Results from OpenAI's o3 Model

A notable development for FrontierMath is OpenAI's introduction of the o3 model, which has achieved unprecedented results on this benchmark. In December 2024, OpenAI announced that o3 solved 25.2% of the FrontierMath problems, an extraordinary leap from previous models that struggled to solve even 2%. This performance not only points to significant advances in AI reasoning capabilities but also raises important questions about what these results mean for the future of AI development.

The success of o3 can be attributed to its enhanced architecture and training methodologies, which prioritize complex reasoning tasks. Unlike earlier models that "predicted" the solution directly, reasoning models like o3, according to current public information, work through an extended chain of thought and also generate multiple candidate responses internally, then evaluate them and pick the best one. This makes them well suited to complex reasoning tasks such as those in the FrontierMath dataset.
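The "generate several candidates, then pick the best" idea described above is often called best-of-N selection. The toy sketch below shows only that selection pattern; it is not a description of how o3 works internally, which OpenAI has not published. The names `best_of_n`, `generate`, and `score` are illustrative assumptions, and the "model" here is just a fixed list of guesses.

```python
from typing import Callable, TypeVar

T = TypeVar("T")


def best_of_n(generate: Callable[[], T], score: Callable[[T], float], n: int) -> T:
    """Draw n candidates from `generate` and return the highest-scoring one."""
    if n < 1:
        raise ValueError("n must be at least 1")
    candidates = [generate() for _ in range(n)]
    return max(candidates, key=score)


# Toy task: find an integer root of f(x) = x^2 - 5x + 6.
def f(x: int) -> int:
    return x * x - 5 * x + 6


# Stand-in for a model's sampled answers (hypothetical, fixed for the demo).
guesses = iter([7, 2, 10, -1])

# The scorer rewards candidates whose residual |f(x)| is small.
best = best_of_n(lambda: next(guesses), lambda x: -abs(f(x)), n=4)
print(best)
```

The quality of the result depends entirely on the scorer. In this toy case the answer can be checked exactly, whereas for open-ended reasoning a reliable scorer is itself a hard problem.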

However, there are still doubts about whether these results are accurate or representative of the model's performance. The model is not yet publicly available, and prior experience suggests that the performance of advanced models is often brittle: they handle certain tasks very well much of the time, yet fail in completely unexpected ways in other cases. This places strong limits on the utility of these models. Since OpenAI's funding of FrontierMath was revealed, there have also been accusations that OpenAI trained or fine-tuned o3 on the benchmark data before testing it. Regardless, only independent testing and evaluation can confirm or refute claims about the model.

Critical Examination of FrontierMath

While FrontierMath represents a significant advance in evaluating AI capabilities, it is essential to examine its limitations and implications critically. One concern is its focus on verifiable solutions at the expense of open-ended exploration, a vital aspect of modern mathematical research. Many contemporary mathematical problems either lack straightforward answers or require lengthy proofs that cannot easily be verified by automated systems. As such, while FrontierMath provides valuable insight into AI's problem-solving abilities, it may not fully capture the nuances of human mathematical reasoning.

Additionally, the benchmark's reliance on unpublished problems is a response to data contamination, an issue prevalent in many existing benchmarks, where models may inadvertently be trained on test data. By using entirely new problems crafted specifically for this evaluation, FrontierMath mitigates this risk. However, transparency about the development process and relationships with AI companies is crucial to maintaining trust within the research community. A controversy arose when it was revealed, only after the announcement of o3, that OpenAI had funded the effort. While this by itself does not necessarily affect the relevance of the benchmark or invalidate the results we have seen, the funding of such a critical effort should have been disclosed from the outset.

Conclusion

FrontierMath stands as a critical milestone in our efforts to evaluate advanced AI capabilities in mathematics. By presenting genuine challenges that require deep reasoning and creativity, it offers a unique lens through which we can measure progress in artificial intelligence. The reported performance of OpenAI's o3 model underscores both the potential and the limitations of current AI systems as they strive toward expert-level reasoning.

As we look ahead, ongoing collaboration between mathematicians and AI researchers will be vital in shaping future benchmarks that capture the full spectrum of mathematical inquiry while pushing the boundaries of what artificial intelligence can achieve.

In our next post, we will explore an often-overlooked aspect of AI development: the data workers powering the training of advanced models and the challenges they face.

References

[1] Epoch AI, 2024. FrontierMath. https://epoch.ai/frontiermath

[2] TechCrunch, 2024. OpenAI announces new o3 models. https://techcrunch.com/2024/12/20/openai-announces-new-o3-model/

[3] Epoch AI, 2024. FrontierMath: A Benchmark for Evaluating Advanced Mathematical Reasoning in AI. https://arxiv.org/abs/2411.04872

[4] MATH Benchmark (Math Word Problem Solving). https://paperswithcode.com/sota/math-word-problem-solving-on-math

[5] GSM8K Benchmark (Arithmetic Reasoning). https://paperswithcode.com/sota/arithmetic-reasoning-on-gsm8k

[6] The Decoder, 2025. OpenAI quietly funded independent math benchmark before setting record with o3. https://the-decoder.com/openai-quietly-funded-independent-math-benchmark-before-setting-record-with-o3/

[7] Epoch AI. AI Benchmarking Hub. https://epoch.ai/data/ai-benchmarking-dashboard